ABOUT OUR SURVEY RESEARCH INNOVATION

Written by James Martherus, Ph.D.

May 3, 2022 at 7:00 pm ET

As survey researchers, one of the most important tasks we face is ensuring that our data accurately represents the population we are studying. Pursuing that goal, many surveys now include questions that allow respondents to describe their gender identity rather than their biological sex. For example, in 2020, Pew Research Center began using a three-category gender identity question, and others in the industry report that as of 2022, the majority of online surveys use a gender question with three or more response categories.

The challenges with weighting survey data for non-binary gender questions

While the industry appears to have settled on measuring gender identity more inclusively, the question of how to construct survey weights with these non-binary gender questions is still very much an open one. There are several overlapping problems.

Survey researchers have not come to a consensus

First, although many researchers agree on the need to update the way we measure gender identity, there is no consensus on what that updated measurement should look like. There are active debates about whether gender should be measured with one question or several, what the specific categories should be and how each category should be defined. Complicating things further, the language used to describe gender identity continues to evolve, so question wording that makes sense today may not make sense in the future.¹

There’s a lack of non-binary population data

Second, there is a lack of high-quality population data describing the non-binary population. Government surveys conducted by the US Census Bureau that researchers routinely use for constructing weighting targets, such as the Current Population Survey (CPS) and the American Community Survey (ACS), still use binary sex questions. Until these surveys change the way they measure gender identity, survey researchers will need to use creative weighting solutions.

In this analysis, we explore some potential solutions for weighting survey data with non-binary gender questions and attempt to validate those solutions.

How to weight survey data for non-binary gender questions

There are at least three broad categories of solutions for dealing with non-binary respondents in the absence of reliable population data: removal, aggregation and assignment.

Removal

Removal refers to excluding non-binary respondents, either by dropping those responses completely or by excluding them from weighting. In the latter case, these responses can still be used in analysis by assigning them a weight of 1.
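A minimal sketch of the "exclude from weighting" variant of removal, assuming simple cell-based post-stratification on gender alone (real weighting schemes usually adjust on several variables at once). Binary respondents are weighted so their gender distribution matches population targets, and non-binary respondents are held at a weight of 1. The function name `removal_weights` and the target shares are hypothetical, not Morning Consult's implementation.

```python
from collections import Counter

def removal_weights(genders, targets):
    """Cell-based post-stratification on gender with non-binary respondents held at weight 1.

    genders: one label per respondent, e.g. "Man", "Woman" or "Not listed".
    targets: hypothetical population shares for the binary categories only.
    """
    counts = Counter(g for g in genders if g in targets)
    n_binary = sum(counts.values())
    weights = []
    for g in genders:
        if g in targets:
            # Weight = population share / sample share among binary respondents.
            weights.append(targets[g] / (counts[g] / n_binary))
        else:
            # Excluded from weighting: kept in the data with a fixed weight of 1.
            weights.append(1.0)
    return weights

weights = removal_weights(["Man", "Man", "Woman", "Not listed"], {"Man": 0.5, "Woman": 0.5})
print(weights)
```

When the targets sum to 1, the binary respondents' weights sum to their own count, so non-binary respondents stay in the file at their unweighted share of the sample.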

Aggregation

Aggregation refers to collapsing gender categories. For example, we might collapse the “woman” and “not listed” categories and weight on “man” and “not man” categories. This is common practice when variables with many categories are used in weighting, particularly when some categories are very small. For example, many researchers will collapse a race question with 12 categories into one with only three or four broader categories.
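The same kind of post-stratification sketch can illustrate aggregation. It assumes, hypothetically, that the "not man" target is taken from the female share in binary population data, since those benchmark surveys have no non-binary category. The `COLLAPSE` mapping and `aggregate_weights` function are illustrative names only.

```python
from collections import Counter

# Hypothetical mapping that collapses "Woman" and "Not listed" into one weighting category.
COLLAPSE = {"Man": "Man", "Woman": "Not man", "Not listed": "Not man"}

def aggregate_weights(genders, targets):
    """Post-stratify on collapsed categories; every respondent receives a weight.

    targets: shares for "Man" and "Not man", e.g. the binary population data's
    male and female shares (an assumption, since that data has no non-binary category).
    """
    collapsed = [COLLAPSE[g] for g in genders]
    counts = Counter(collapsed)
    n = len(collapsed)
    # Weight = population share / sample share for the respondent's collapsed category.
    return [targets[c] / (counts[c] / n) for c in collapsed]

weights = aggregate_weights(["Man", "Woman", "Not listed", "Woman"], {"Man": 0.5, "Not man": 0.5})
```

Unlike removal, every respondent is weighted here, but women and non-binary respondents in the same collapsed cell always receive identical weights.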

Assignment

Assignment refers to assigning non-binary respondents to one of the two binary gender categories for weighting purposes. This could be done through random assignment, imputation or by some other method.

Which weighting solutions should survey researchers use?

Others have made thoughtful arguments about selecting a solution to this problem. In their excellent article, Kennedy et al. (2022) suggest considering ethics, accuracy, practicality and flexibility when evaluating potential solutions. These are all important considerations, and researchers should make their own decisions about which solution reaches the right balance.

In this analysis, we focus on accuracy: specifically, how well different weighting methods allow us to represent the non-binary population within the broader population.

To measure accuracy, we first searched for high-quality surveys of the non-binary population to use as a benchmark. We considered a number of surveys, including NIH’s PATH Study, the TransPop Study and the Household Pulse Survey, all of which measure gender identity beyond the two traditional response categories. We ultimately selected the Household Pulse Survey because it is recent, uses a high-quality sampling procedure and the Census Bureau may adopt its question wording in the future.

Next, we weighted data from Morning Consult’s Intelligence survey using a variety of methods:

- Removal: Non-binary respondents were excluded during weighting and assigned a weight of 1.

- Coin Flip: Non-binary respondents were randomly assigned to the “man” or “woman” category for weighting purposes.

- Imputation: Non-binary respondents were assigned to the “man” or “woman” category using an imputation model. The imputation model was trained on Household Pulse data and used age, race, ethnicity, education and census region to predict sex assigned at birth for each respondent.

- Probabilistic Assignment: Using Household Pulse data, we calculated the probability that a respondent was born male conditional on age, education and race. We then used these probabilities to assign a binary sex to each non-binary respondent based on their demographic profile.

- Aggregation: Respondents who selected “woman” and “not listed” were collapsed into a single category.
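The coin flip and probabilistic assignment methods above can be sketched as follows. The demographic cells, the 0.5 fallback for cells missing from the benchmark, and the choice to draw each assignment at random (rather than, say, thresholding the probability) are all assumptions; the text does not specify how the probabilities are turned into assignments. `benchmark` is a hypothetical stand-in for weighted Household Pulse records.

```python
import random
from collections import Counter

def coin_flip(genders, rng):
    """Assign each "Not listed" respondent to "Man" or "Woman" with equal probability."""
    return [rng.choice(["Man", "Woman"]) if g == "Not listed" else g for g in genders]

def male_probabilities(benchmark):
    """Estimate P(born male | cell) from weighted benchmark records.

    benchmark: iterable of (cell, sex_at_birth, weight) triples, where cell is a
    tuple such as (age_group, education, race) and sex_at_birth is "Male" or "Female".
    """
    totals, males = Counter(), Counter()
    for cell, sex, weight in benchmark:
        totals[cell] += weight
        if sex == "Male":
            males[cell] += weight
    return {cell: males[cell] / totals[cell] for cell in totals}

def probabilistic_assignment(genders, cells, probs, rng, fallback=0.5):
    """Assign "Not listed" respondents to "Man" with probability P(born male | cell)."""
    assigned = []
    for g, cell in zip(genders, cells):
        if g == "Not listed":
            # Assumption: cells missing from the benchmark fall back to 0.5.
            p = probs.get(cell, fallback)
            assigned.append("Man" if rng.random() < p else "Woman")
        else:
            assigned.append(g)
    return assigned

rng = random.Random(42)
cell = ("18-29", "College", "White")
probs = male_probabilities([(cell, "Male", 1.0), (cell, "Female", 3.0)])
print(probs[cell])  # 0.25
print(probabilistic_assignment(["Not listed", "Woman"], [cell, cell], probs, rng))
```

After assignment, every respondent carries a binary category and can go through the usual binary weighting step. Passing a seeded `random.Random` keeps the assignment reproducible across runs.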

We then benchmarked the weighted Morning Consult data against Household Pulse estimates for a variety of variables among the non-binary population. Following the framework used in Pew benchmarking studies, we calculated the absolute difference between the population benchmark and the weighted sample distribution for each category of each question. We then calculated question-level bias by averaging category-level biases, and a single summary of overall bias by averaging the question-level biases.
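The benchmarking arithmetic can be sketched as below, assuming each question's benchmark and weighted estimate are stored as dicts of category shares. The differences are taken as absolute values: when both distributions sum to 1, the signed differences across a question's categories sum to zero, so averaging them would always return zero. The function names and the example numbers are hypothetical and are not results from this study.

```python
def weighted_shares(responses, weights):
    """Weighted share of each response category within a subgroup (e.g. non-binary respondents)."""
    total = sum(weights)
    shares = {}
    for response, w in zip(responses, weights):
        shares[response] = shares.get(response, 0.0) + w / total
    return shares

def question_bias(benchmark, estimate):
    """Average absolute difference between benchmark and estimate across a question's categories."""
    diffs = [abs(share - estimate.get(cat, 0.0)) for cat, share in benchmark.items()]
    return sum(diffs) / len(diffs)

def overall_bias(questions):
    """Average of question-level biases; questions maps name -> (benchmark, estimate)."""
    scores = [question_bias(bench, est) for bench, est in questions.values()]
    return sum(scores) / len(scores)

# Toy numbers for illustration only.
questions = {
    "q1": ({"Yes": 0.6, "No": 0.4}, {"Yes": 0.5, "No": 0.5}),
    "q2": ({"A": 0.2, "B": 0.3, "C": 0.5}, {"A": 0.2, "B": 0.2, "C": 0.6}),
}
print(round(overall_bias(questions), 4))  # prints 0.0833
```

In practice, each estimate dict would come from `weighted_shares` applied to the non-binary respondents' answers and the weights produced by one of the methods above.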

Results of each approach

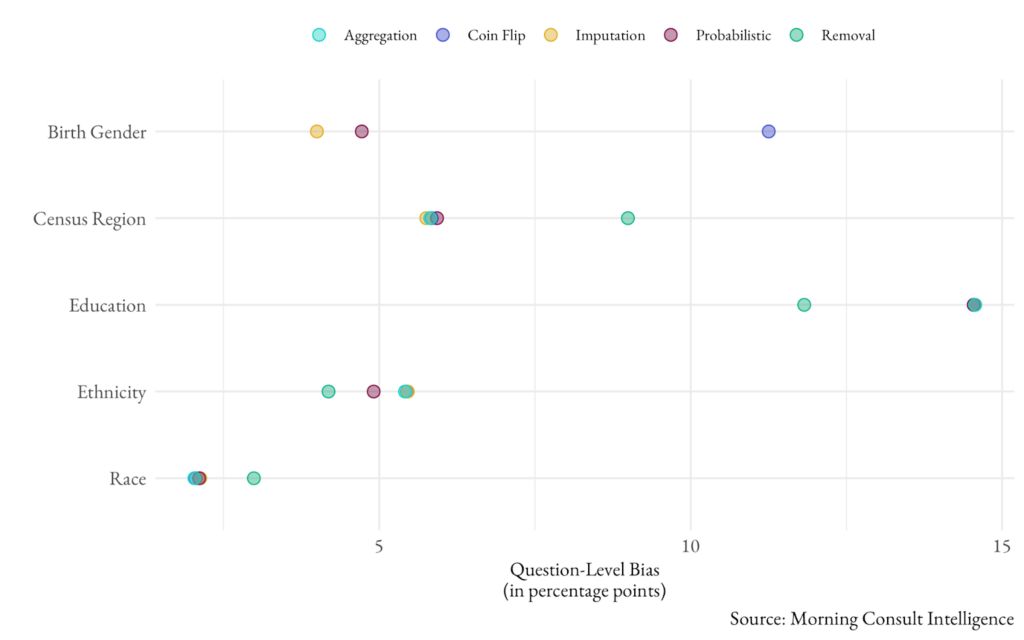

Figure 1 shows the question-level bias for a variety of questions under each weighting method. There is no clear winner. The aggregation, imputation, coin flip and probabilistic methods perform very similarly for all variables except sex assigned at birth, where the coin flip method substantially underperforms the other methods. The removal method performs noticeably better than the other methods on some variables but noticeably worse on others. Overall bias numbers are also very close, with probabilistic assignment and imputation (.064 each) narrowly outperforming the other methods.

| | Aggregation | Coin Flip | Imputation | Probabilistic | Removal |
|---|---|---|---|---|---|
| Overall bias | .069 | .078 | .064 | .064 | .070 |

Table 1: Overall Bias by Method

This suggests that the choice of weighting method doesn’t matter much in terms of accurately representing the non-binary population. Instead, survey researchers should consider the ethics, practicality and flexibility of each solution. At Morning Consult, we ultimately chose the probabilistic method as our standard approach because it performed well in our benchmarking exercise and is easier to implement in-survey than a more complex imputation model. In some custom studies, we use other methods when they make more sense.

Figure 1: Question-Level Bias by Method


The future of weighting survey data for non-binary gender questions

Weighting survey data using non-binary gender questions remains a difficult problem. As our analysis shows, no solution is perfect.

There are some signs that this problem may be a temporary one. The Biden administration recently requested $10 million to research adding questions about sexuality and gender identity to the American Community Survey. If these questions are added in the future, we can simply weight to three or more categories rather than two. In a recent article in PS: Political Science and Politics, Brian Urlacher presents evidence that shifting to this approach is unlikely to negatively impact survey weighting.

That said, changes to Census questionnaires would not end debates on the appropriate measurement of gender identity, how to properly align and combine different categories and so on. These conversations will likely continue and may become even more complex as we evaluate how to measure gender identity outside the United States. At Morning Consult, we continue to actively study proper measurement and approaches to weighting the non-binary population. Our respondents deserve it.

¹ For simplicity, in this post we focus on the simplest option: a single question with three categories. Specifically, our question asks respondents, “Which of the following best describes your gender identity?” with three response options: “Man,” “Woman,” and “Not listed.” Respondents who select “Not listed” can describe their gender identity in an open-end text box.